Thank you for tuning in to this audio-only podcast presentation. This is week 63 of The Lindahl Letter publication. A new edition arrives every Friday. This week the machine learning or artificial intelligence related topic under consideration is, “Sentiment and consensus analysis.”

My coding experience probably aligns most closely with this topic. Crawlers, bots, and other automation use sentiment analysis. A lot of my original automated coding efforts were related to trying to understand sentiment analysis. A lot of people built web crawling software that ran on some pretty tight schedules to collect a set of target pages. That content was then scraped to find mentions of companies trading on public stock exchanges. Those company names and, more importantly, stock symbols would be evaluated for sentiment. Initially that analysis was brute force, or you could say it relied on explicitly hardcoded values, counting how many words near the stock symbol or name were positive or negative. You can get dictionary-style word lists with sentiment scoring that make that relatively easy to accomplish. You can probably imagine that information was used to try to figure out if a stock was going to go up or down. I personally only mapped out the system for paper trading, but it was a very interesting technology to build.
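To make that hardcoded approach concrete, here is a minimal sketch in Python. It is not the system described above. The tiny word list, the ticker symbols, the function name, and the five-word window are all made up for illustration, and a real scored dictionary would be far larger.

```python
import re

# Hypothetical mini-lexicon; a real sentiment word list would be far larger.
LEXICON = {"surge": 1, "beat": 1, "strong": 1, "gain": 1,
           "miss": -1, "weak": -1, "lawsuit": -1, "drop": -1}

def score_near_symbol(text, symbol, window=5):
    """Sum lexicon scores for words within `window` tokens of each symbol mention."""
    tokens = re.findall(r"[A-Za-z']+", text)
    lowered = [t.lower() for t in tokens]
    total = 0
    counted = set()  # avoid double counting when two mentions overlap
    for i, tok in enumerate(tokens):
        if tok == symbol:  # symbols are matched case-sensitively
            lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                if j not in counted:
                    counted.add(j)
                    total += LEXICON.get(lowered[j], 0)
    return total

sample = ("ACME shares surge on strong demand. Meanwhile analysts say "
          "that BOLT faces a lawsuit and weak sales.")
print(score_near_symbol(sample, "ACME"))  # 2
print(score_near_symbol(sample, "BOLT"))  # -2
```

A fixed window is crude. It ignores negation and sentence boundaries, which is part of why this kind of scoring only counts as brute force.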

You can go out to Google Scholar and find a ton of articles to read with a search for, “machine learning sentiment analysis,” [1]. You will see a ton of natural language processing and machine learning topics that intersect with the phrase sentiment analysis. Understanding the sentiment of a block of prose is highly desirable for a variety of reasons. Some of the use cases are very valuable, which makes this particular topic something that a lot of different researchers have worked to understand. That Google Scholar search directed me to an academic publication with 501 citations to take an initial look at for this review. It was another Springer publication where they wanted $39.95 to download that single PDF. Those prices for access to academic works are why preprints and open access journals are so popular. Erik Boiy and Marie-Francine Moens produced a work called, “A machine learning approach to sentiment analysis in multilingual Web texts,” [2]. You could go out to ResearchGate and get the publication without the paywall as it was shared by one of the authors [3]. The article is surprisingly easy to read and happens to be very direct in the delivery of content. Back in 2008 and 2009 they spent time talking about manual annotation and working with different techniques. Current machine learning efforts have come a long way thanks to sample training datasets where a lot of this type of mapping or annotation has been done already. To that end you can get a lot of off-the-shelf machine learning models for this type of sentiment analysis that outperform the 83% accuracy they found for English texts.
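For contrast with hardcoded word scores, here is a rough sketch of what learning from annotated examples looks like. It is a tiny multinomial Naive Bayes classifier with add-one smoothing written in plain Python. It is an illustration only and not a reproduction of the setup in the paper. The four labeled sentences and the function names are invented for this example.

```python
import math
import re
from collections import Counter, defaultdict

def tokenize(text):
    return re.findall(r"[a-z']+", text.lower())

def train(examples):
    """examples: list of (text, label) pairs that were annotated by hand."""
    label_counts = Counter()
    word_counts = defaultdict(Counter)
    vocab = set()
    for text, label in examples:
        label_counts[label] += 1
        for word in tokenize(text):
            word_counts[label][word] += 1
            vocab.add(word)
    return label_counts, word_counts, vocab

def predict(model, text):
    """Return the label with the highest log probability for the text."""
    label_counts, word_counts, vocab = model
    total_docs = sum(label_counts.values())
    best_label, best_logp = None, float("-inf")
    for label, n_docs in label_counts.items():
        logp = math.log(n_docs / total_docs)  # class prior
        total_words = sum(word_counts[label].values())
        for word in tokenize(text):
            if word not in vocab:
                continue  # ignore words never seen during training
            # Add-one (Laplace) smoothing keeps unseen pairs from zeroing out.
            logp += math.log((word_counts[label][word] + 1)
                             / (total_words + len(vocab)))
        if logp > best_logp:
            best_label, best_logp = label, logp
    return best_label

# Made-up annotated examples standing in for a real training dataset.
training = [
    ("I love this product", "pos"),
    ("great value and great service", "pos"),
    ("what a terrible experience", "neg"),
    ("I hate the slow service", "neg"),
]
model = train(training)
print(predict(model, "great product"))          # pos
print(predict(model, "terrible slow service"))  # neg
```

Nobody assigned a score to any word here. The word weights fall out of the labeled examples, which is why the amount and quality of annotation matters so much.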

After digging into that foundational article in my review, I’ll admit my focus shifted to looking at how people use sentiment analysis to mine data from Tweets on Twitter. One of the articles that caught my attention was, “Machine Learning-Based Sentiment Analysis for Twitter Accounts,” from 2018 [4]. That article also very quickly referred to sentiment analysis as opinion mining, which seems to be a popular convention at the start of research papers. On the Twitter development webpage you can even find instructions related to, “How to analyze the sentiment of your own Tweets,” that come complete with code snippets [5]. Other coding examples exist as well that show step-by-step methods of doing this type of sentiment analysis [6]. The paper referenced above and the step-by-step guide I shared both use the Tweepy code package to work with the Twitter API. Using that as the starting point for gathering Tweets, the next step in the process involves getting ready to do some type of sentiment analysis. That happens to be the part of the equation I generally find interesting. The researchers from the above article basically made a classification model that yielded percent positive and percent negative. The actual methods for determining the positive or negative sentiment are very interesting. When the scoring comes from a lexicon, that type of explicit encoding hardly requires a machine learning model. You would not have to train the model on anything, as every word is already encoded with a value.
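A percent positive and percent negative breakdown from explicitly encoded word values can be sketched in a few lines of Python. This is my own illustration and not the method from the paper or the guides. The word scores are hypothetical, and the hardcoded strings stand in for Tweets that would normally be gathered through Tweepy and the Twitter API.

```python
import re

# Hypothetical word scores standing in for a published sentiment lexicon.
WORD_SCORES = {"love": 1.0, "great": 1.0, "good": 0.5,
               "bad": -0.5, "terrible": -1.0, "hate": -1.0}

def tweet_polarity(text):
    """Average the scores of known words; 0.0 when no word is in the lexicon."""
    words = re.findall(r"[a-z']+", text.lower())
    scores = [WORD_SCORES[w] for w in words if w in WORD_SCORES]
    return sum(scores) / len(scores) if scores else 0.0

def percent_breakdown(tweets):
    """Return the share of tweets labeled positive, negative, and neutral."""
    counts = {"positive": 0, "negative": 0, "neutral": 0}
    for text in tweets:
        polarity = tweet_polarity(text)
        if polarity > 0:
            counts["positive"] += 1
        elif polarity < 0:
            counts["negative"] += 1
        else:
            counts["neutral"] += 1
    total = len(tweets) or 1  # avoid dividing by zero on an empty list
    return {label: 100.0 * n / total for label, n in counts.items()}

# Sample strings standing in for Tweets pulled from the API.
sample_tweets = [
    "I love this great phone",
    "Terrible service, I hate waiting",
    "The meeting is at noon",
    "Good coffee but bad parking",
]
print(percent_breakdown(sample_tweets))
# {'positive': 25.0, 'negative': 25.0, 'neutral': 50.0}
```

Nothing in that sketch is trained. The last sample also shows a weakness of averaging, since a mixed opinion cancels out to neutral.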

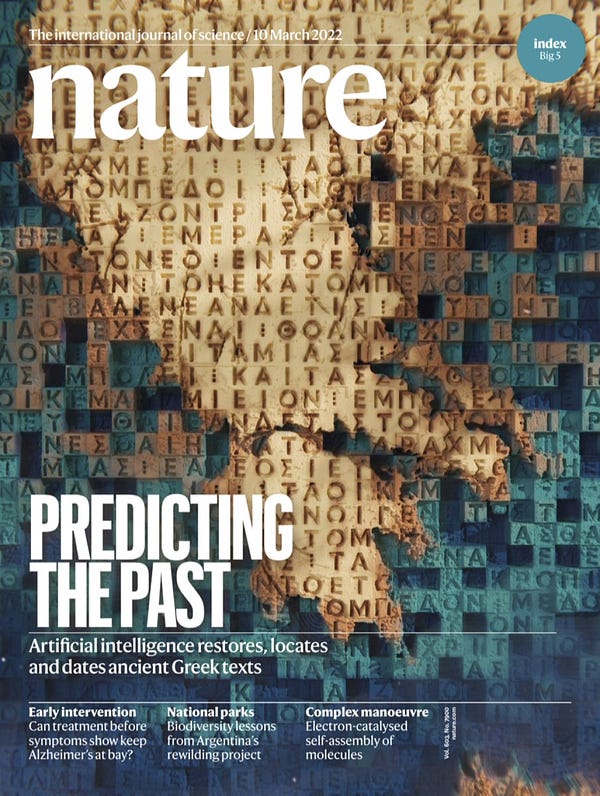

You could spend an entire day reading the guides hosted on the Berkeley Library website [7]. You can go find a bunch of different sentiment analysis dictionaries with scoring and other elements to do this type of analysis. It used to be a lot harder to achieve before the scoring was just something you could call or reference. I wonder how much the scoring shifts over time and with the collective national mood. Languages are always shifting based on the way we use and apply meaning to words. Something with a relatively low score could shift rapidly with a single meme, viral post, or major world news event. Our language and how we share things with people are constantly changing. You can go read a recent article published in Nature in March of 2022 titled, “Restoring and attributing ancient texts using deep neural networks,” [8]. That article covers the Ithaca model and how it uses a deep neural network to restore ancient Greek inscriptions and attribute them to a location. You can see that our modeling of language has advanced a lot between 2008 and 2022. Our ability to glean insights out of sentiment modeling and provide context is potentially increasing daily.

Links and thoughts:

“[ML News] DeepMind controls fusion | Yann LeCun's JEPA architecture | US: AI can't copyright its art”

“Never Hate On Your Community - WAN Show March 4, 2022”

Footnotes:

[2] Boiy, E., Moens, MF. A machine learning approach to sentiment analysis in multilingual Web texts. Inf Retrieval 12, 526–558 (2009). https://doi.org/10.1007/s10791-008-9070-z

[4] Hasan, A., Moin, S., Karim, A., Shamshirband, S. Machine Learning-Based Sentiment Analysis for Twitter Accounts. Mathematical and Computational Applications 23(1), 11 (2018). https://doi.org/10.3390/mca23010011

[5] https://developer.twitter.com/en/docs/tutorials/how-to-analyze-the-sentiment-of-your-own-tweets

[6] https://towardsdatascience.com/step-by-step-twitter-sentiment-analysis-in-python-d6f650ade58d

[7] https://guides.lib.berkeley.edu/c.php?g=491766&p=7826401

[8] Assael, Y., Sommerschield, T., Shillingford, B. et al. Restoring and attributing ancient texts using deep neural networks. Nature 603, 280–283 (2022). https://doi.org/10.1038/s41586-022-04448-z

What’s next for The Lindahl Letter?

Week 64: Language models revisited

Week 65: Ethics in machine learning

Week 66: Does a digital divide in machine learning exist?

Week 67: Who still does ML tooling by hand?

Week 68: Publishing a model or selling the API?

I’ll try to keep the “what’s next” list for The Lindahl Letter forward-looking with at least five weeks of posts in planning or review. If you enjoyed this content, then please take a moment and share it with a friend. Thank you and enjoy the week ahead.
