Reverse engineering GPT-2 or GPT-3

When I put this topic on the agenda, I thought GPT-3 was going to be the largest model and the pinnacle of language models for some time. It was and is an epic language model to understand and study, both in terms of ethical considerations and as a practitioner. It turns out that people really like to build exceedingly large language models. My prediction is that at some point this push forward will hit exhaustion, and a language model will be as complete as its parameterization allows. Back on August 18, 2021, a large group of researchers from Stanford University published a 212-page paper called “On the Opportunities and Risks of Foundation Models.”[1] You may still be working your way through the sections in that paper. Just jump right to page 74 and you can start digging into modeling. Both researchers and machine learning collectives are really focused on building larger and larger language models, and that paper explains a little bit about what they are attempting to achieve. I’m not totally ready to call them all foundation models yet, but that way of describing them will probably take off based on that paper. It is bound to pick up a ton of citations in the next decade.

Everyone at OpenAI has been super busy recently releasing the Codex closed beta.[2] I have a login to the closed beta for Codex and am participating in testing it out. That project and the GitHub Copilot release are very interesting uses of very large language models to drive coding and programming forward.[3] Between the two implementations, I had a lot more fun messing around with the Codex closed beta than I did with Copilot. If I had been hardcore working on a coding effort at the time, then the Copilot implementation might have been more dynamic and entertained me more during the process. I have watched a ton of videos on both of them as well.

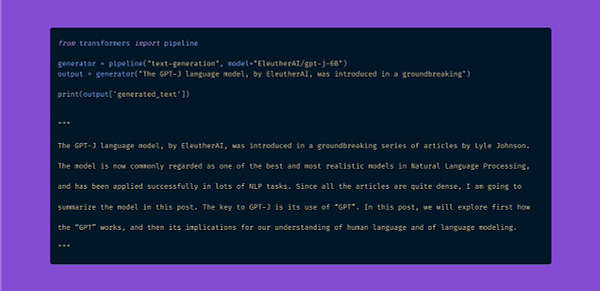

That tweet from Hugging Face pretty much says it all about the present state of reverse engineering GPT models. You can see that the team over at EleutherAI is now up to GPT-J, a model that contains 6 billion parameters.[4] You can drop right into the page for the model and start working with a prompt to get output. They made it super easy to jump in the pool and see what the model is all about. Given that GPT-3 is proprietary, the good folks of the internet (EleutherAI) promptly went out and built GPT-Neo and shared it with the world in 1.3 and 2.7 billion parameter versions.[5] You can see they are quickly scaling up to many billions of parameters in these models. A few weeks back I got really focused on reading an article on the arXiv website called “The Pile: An 800GB Dataset of Diverse Text for Language Modeling” (Gao et al., 2020). People are pulling together really powerful datasets, and these large language models, or foundation models if you will, are really starting to translate into very interesting use cases. The entire premise of the closed beta that is running on the Codex implementation is to see what people are going to do with the model. It is a very clear “Field of Dreams” type of endeavor where they built it and they are waiting for people to dream up things to do with it.
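To get a feel for where headline numbers like 1.3, 2.7, and 6 billion come from, here is a minimal back-of-the-envelope sketch. It uses the common rule of thumb that each transformer block holds roughly 12 × d_model² weights, plus the embedding tables, and it ignores biases and layer norms. The function name `approx_params` and the layer counts, hidden sizes, and vocabulary sizes below are my assumptions based on my reading of the publicly posted model configurations, not figures from the sources cited in this post, so treat the output as a rough estimate.

```python
def approx_params(n_layers, d_model, vocab_size, n_positions=0, tied_embeddings=True):
    """Rough decoder-only transformer weight count (ignores biases and layer norms)."""
    attention = 4 * d_model * d_model      # query, key, value, and output projections
    feed_forward = 8 * d_model * d_model   # two linear layers with a 4x hidden width
    blocks = n_layers * (attention + feed_forward)
    token_embeddings = vocab_size * d_model
    position_embeddings = n_positions * d_model  # 0 when positions are not learned
    # An untied output head adds a second vocab-by-d_model matrix.
    output_head = 0 if tied_embeddings else vocab_size * d_model
    return blocks + token_embeddings + position_embeddings + output_head


# Assumed hyperparameters (hypothetical here; check the published configs).
ASSUMED_CONFIGS = {
    "GPT-Neo 1.3B": dict(n_layers=24, d_model=2048, vocab_size=50257, n_positions=2048),
    "GPT-Neo 2.7B": dict(n_layers=32, d_model=2560, vocab_size=50257, n_positions=2048),
    "GPT-J 6B": dict(n_layers=28, d_model=4096, vocab_size=50400, tied_embeddings=False),
}

estimates = {name: approx_params(**cfg) for name, cfg in ASSUMED_CONFIGS.items()}

for name, total in estimates.items():
    print(f"{name}: about {total / 1e9:.2f} billion parameters")
```

The takeaway is that the block term grows with the square of the hidden size, so widening the model is what drives the count into the billions far more than the vocabulary does.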

Links and thoughts:

This video was a bit of mystery story time with Yannic, “[ML News] Plagiarism Case w/ Plot Twist | CLIP for video surveillance | OpenAI summarizes books”

A lot of videos arrived on the Microsoft Developer YouTube channel this week thanks to the Cloud Summit 2021 event. I have not had enough time to work through them all yet, but I did watch, “AI Show Live | Episode 33 | High Level MLOps from Microsoft Data Scientists.”

During the course of working on this Substack missive I watched/listened to Linus and Luke, “Pre-Built PCs Are About to get MUCH Worse - WAN Show October 1, 2021”

Top 5 Tweets of the week:

Footnotes:

[1] https://arxiv.org/abs/2108.07258 or https://arxiv.org/pdf/2108.07258.pdf

[2] https://openai.com/blog/openai-api/ and https://openai.com/blog/openai-codex/

[3] https://copilot.github.com/

[4] https://6b.eleuther.ai/

[5] https://www.eleuther.ai/projects/gpt-neo/

What’s next for The Lindahl Letter?

Week 38: Do most ML projects fail?

Week 39: Machine learning security

Week 40: Applied machine learning skills

Week 41: Machine learning and the metaverse

Week 42: Time crystals and machine learning

I’ll try to keep the what’s next list forward-looking, with at least five weeks of posts in planning or review. If you enjoyed reading this content, then please take a moment and share it with a friend.